When To Stop Your A/B Test - The Messy Reality Version

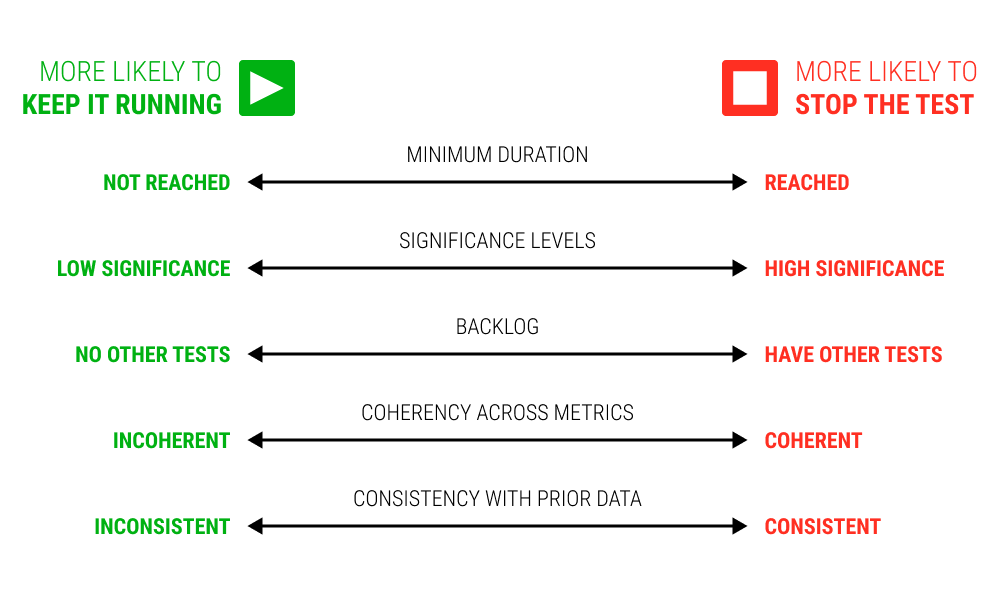

Experimentation practitioners want to stop their a/b tests at the optimal time. If an experiment runs too long, it delays potential improvements. On the other hand, if it runs too short, we risk deciding incorrectly based on noisy results. As a result, most practitioners take a reasonably simple approach and either set a fixed testing duration or use a dynamic stopping rule (ex: tied to some significance threshold). However, as a practitioner myself, I find that other factors have crept into real projects and made stopping decisions a little messier. And so, here are 5 criteria that I consider when deciding whether to stop an a/b test or keep it running.

Minimum Duration

In some form or another, most a/b tests will probably start with a predefined minimum testing duration (ex: 1 or more weeks). This is done for a number of reasons. For one, we want to expose the experiment to a large enough sample (ex: web site visitors), which provides us with the statistical power to detect our effects (the more visitors, the greater the sensitivity and the smaller the effects we can detect). A minimum testing duration also gives our experiment a more representative time frame by covering at least a typical week, business cycle or cohort. And finally, a minimum testing duration protects us from deciding in the first minutes, hours or days of an a/b test, when a result may look great at first but then fade over time due to chance or lack of power. Taken together, if an a/b test reaches its minimum duration then we're more likely to stop it than not.
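To make the link between sample size, power and duration concrete, here is a minimal sketch of how a minimum duration could be estimated. It assumes a conversion-rate metric, a two-sided two-proportion z-test, and the textbook normal-approximation sample size formula. The function name `min_duration_days` and its defaults (5% significance, 80% power, rounding up to whole weeks) are my own illustrative choices, not a standard.

```python
import math
from statistics import NormalDist


def min_duration_days(baseline_rate, relative_lift, daily_visitors_per_arm,
                      alpha=0.05, power=0.8, min_days=7):
    """Estimate test duration in days, rounded up to whole weeks.

    Assumes a two-sided two-proportion z-test (normal approximation).
    All parameter names and defaults are illustrative assumptions.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power

    # Required visitors per arm to detect the difference p2 - p1.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n_per_arm = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2

    days = math.ceil(n_per_arm / daily_visitors_per_arm)

    # Never go below the floor, and cover full weeks so every weekday is represented.
    weeks = math.ceil(max(days, min_days) / 7)
    return weeks * 7


# Hypothetical inputs: 5% baseline conversion, +10% relative lift, 2,000 visitors per arm per day.
print(min_duration_days(0.05, 0.10, 2000))
```

Halving the lift we want to detect roughly quadruples the required sample, which is why small expected effects push the minimum duration out so quickly.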

Significance

But not all experiments that show strong effects early on fade. And here the level of significance can pressure us to decide and stop an experiment early - especially if the effect is a strong negative one. Practically speaking, the more significant the effect, the more likely it is that we'll stop the experiment.

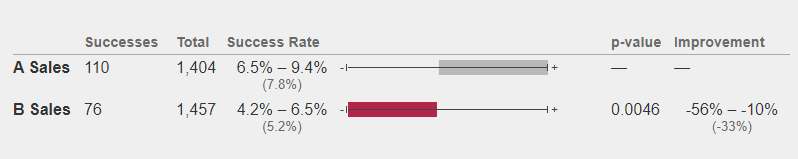

I admit I would not be the one to advise stopping an incomplete experiment that just scratches a symbolic p-value of 0.05 on its second day of testing. In practice, I've observed many such significance blips fade away to noise when given the chance to run longer. However, I do want to share a real example of an experiment that I advised to stop early. I simply did not feel comfortable running it longer due to its strong negative effect. The same experiment also had multiple metrics that were coherently reinforcing the same picture (more on that later).
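One way to tell a day-two blip from a strong negative effect is to demand a much stricter threshold before the minimum duration is reached. The sketch below assumes a pooled two-proportion z-test on conversion counts. The function names and the `strict_alpha=0.001` cutoff are hypothetical choices for illustration, not a recommended standard.

```python
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Return (rate_b - rate_a, two-sided p-value) using a pooled z-test."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = (pooled * (1 - pooled) * (1 / n_a + 1 / n_b)) ** 0.5
    if se == 0:
        # No variation at all (every visitor or no visitor converted): nothing to test.
        return rate_b - rate_a, 1.0
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_b - rate_a, p_value


def should_stop_early(conv_a, n_a, conv_b, n_b, strict_alpha=0.001):
    """Flag an early stop only for a negative effect that clears a strict threshold.

    strict_alpha is an assumed, deliberately conservative cutoff.
    """
    diff, p_value = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    return diff < 0 and p_value < strict_alpha


# Hypothetical counts: control (A) vs variation (B), 10,000 visitors each.
print(should_stop_early(500, 10_000, 380, 10_000))  # strong negative effect
print(should_stop_early(500, 10_000, 440, 10_000))  # barely under 0.05: a blip, keep running
```

A stricter early threshold is only a rough guard against peeking. Formal sequential testing methods exist for this purpose, and this sketch does not implement any of them.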

Backlog

Having prebuilt a/b tests that are waiting in line to run may add pressure to simply move on from an a/b test that reaches its predefined duration. The opposite is also true. When we don't have any new a/b tests waiting in the queue, I've personally let active tests run longer instead of stopping them when they were due. This is especially true when tests are insignificant (with positive or negative effects). Sometimes not having any a/b tests running at all might be worse than letting an a/b test bake a while longer and achieve a clearer effect as it gains more data. Sometimes a/b tests show up as insignificant not because they really have no effect, but rather because they were underpowered, with an inadequate sample or duration. And extra exposure may provide us with a clearer signal.

Coherency Across Metrics

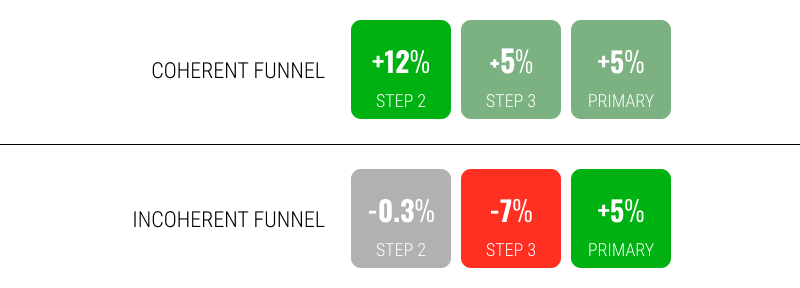

When we track multiple metrics, we gain the ability to see if an a/b test is coherent or not. Imagine running an experiment on a typical e-commerce product page with a number of steps (a funnel) before a customer makes a transaction. When we have data for all of these steps along with the final sales metric, we typically might expect some form of coherency (positive or negative) across the funnel. More specifically, if we introduced a positive change on the product page, we'd typically expect it to trickle through the steps before becoming visible in the final sales metric. When our metrics are coherent and explainable it makes us more comfortable stopping an experiment. If on the other hand we see incoherent metrics that might be hard to explain, it could be a sign of chance or noise acting on our experiment - hinting it might need more time.

Below is an example of this. The first set of metrics is more coherent. And personally, I've stopped and rolled out such a/b tests even if the final or primary metric was positive but not significant (with the initial metrics being both significant and positive). The second example shows metrics that are all over the place. And this might not be adequate grounds to stop and implement, even if the primary metric is significant.
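The coherency idea can be sketched as a simple check on the direction of each funnel metric. This is only one possible reading of "coherent" (all non-zero effects share the same sign), and the class, function names and numbers below are hypothetical illustrations, not data from a real test.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    name: str
    effect: float   # relative effect of variation vs control, e.g. 0.04 = +4%
    p_value: float


def is_coherent(metrics):
    """True when every non-zero effect points in the same direction."""
    signs = {m.effect > 0 for m in metrics if m.effect != 0}
    return len(signs) <= 1


def supports_rollout(metrics, alpha=0.05):
    """Coherent and positive, with at least one significant metric in the funnel."""
    if not metrics or not is_coherent(metrics):
        return False
    all_positive = all(m.effect > 0 for m in metrics)
    any_significant = any(m.p_value < alpha for m in metrics)
    return all_positive and any_significant


# Illustrative numbers only, ordered from the first funnel step to the primary metric.
coherent = [
    MetricResult("add_to_cart", 0.08, 0.01),
    MetricResult("checkout_start", 0.06, 0.03),
    MetricResult("sales", 0.04, 0.12),   # positive but not significant
]
scattered = [
    MetricResult("add_to_cart", -0.05, 0.20),
    MetricResult("checkout_start", 0.07, 0.30),
    MetricResult("sales", 0.09, 0.04),   # significant, but the funnel disagrees
]

print(supports_rollout(coherent), supports_rollout(scattered))
```

A sign check is deliberately crude. It ignores effect sizes and how correlated the metrics are, so it works better as a prompt to look closer than as a verdict.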

Consistency With Prior Data

Finally, the results of our experiments may or may not match those of similar experiments run in the past. When two or more similar experiments agree, this provides us with a stronger signal and greater confidence - and makes it easier for us to stop and decide. On the other hand, when two similar experiments have opposing effects, it could be a sign of an incomplete experiment. Of course, when opposing effects are equally trustworthy, powered, and highly significant, we also have to be ready to accept them for what they are.

What's Next

As I've listed these stopping considerations, I realize that I might have suggested that this is a manual human task. I'd like to be clear that this wasn't my intent. I do strongly believe that automating these stopping rules as an explicit algorithm is probably the most natural next step. I would not be surprised if a piece of code could stop and decide on experiments more optimally than a human could.
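As a starting point, the five considerations could be combined into a single rule-based function. The sketch below is only one possible encoding: the field names, the order of the checks and both thresholds are my assumptions for illustration, and a real version would need tuning against past experiments.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExperimentState:
    days_run: int
    min_days: int
    effect: float                      # relative effect on the primary metric
    p_value: float
    metrics_coherent: bool
    agrees_with_prior: Optional[bool]  # None when no similar past test exists
    backlog_size: int                  # number of tests waiting to run


def stopping_decision(s, alpha=0.05, strict_alpha=0.001):
    """Return (decision, reason). Rule order and thresholds are assumptions."""
    # Strong, coherent harm can justify stopping before the minimum duration.
    if s.effect < 0 and s.p_value < strict_alpha and s.metrics_coherent:
        return ("stop", "strong negative effect")
    if s.days_run < s.min_days:
        return ("keep running", "minimum duration not reached")
    if s.p_value < alpha and s.metrics_coherent and s.agrees_with_prior is not False:
        return ("stop", "significant, coherent, not contradicted by prior tests")
    if s.backlog_size > 0:
        return ("stop", "minimum duration reached and other tests are waiting")
    return ("keep running", "no backlog, so let the test gather more data")


# Hypothetical state: two weeks in, insignificant result, nothing waiting in the queue.
state = ExperimentState(days_run=14, min_days=14, effect=0.02, p_value=0.30,
                        metrics_coherent=True, agrees_with_prior=None, backlog_size=0)
print(stopping_decision(state))
```

Returning a reason alongside the decision keeps the rule auditable, so a human can still see why a test was stopped or left running.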

Jakub Linowski on May 14, 2021
